Kevin Vasconi, chief information officer of R.L. Polk & Co., saw the future. And it made him feel a little sick.

In the fall of 2004, Vasconi was meeting with other top executives of the company, one of the largest providers of marketing data to automobile manufacturers, in the boardroom of its suburban Detroit headquarters—in the heart of the U.S. auto industry.

It was a state-of-the-company gathering to discuss Polk’s strategic direction. And the consensus was that its information systems wouldn’t be able to support the business into the next decade. “If you have that discussion honestly,” Vasconi says, “it will scare the crap out of you.”

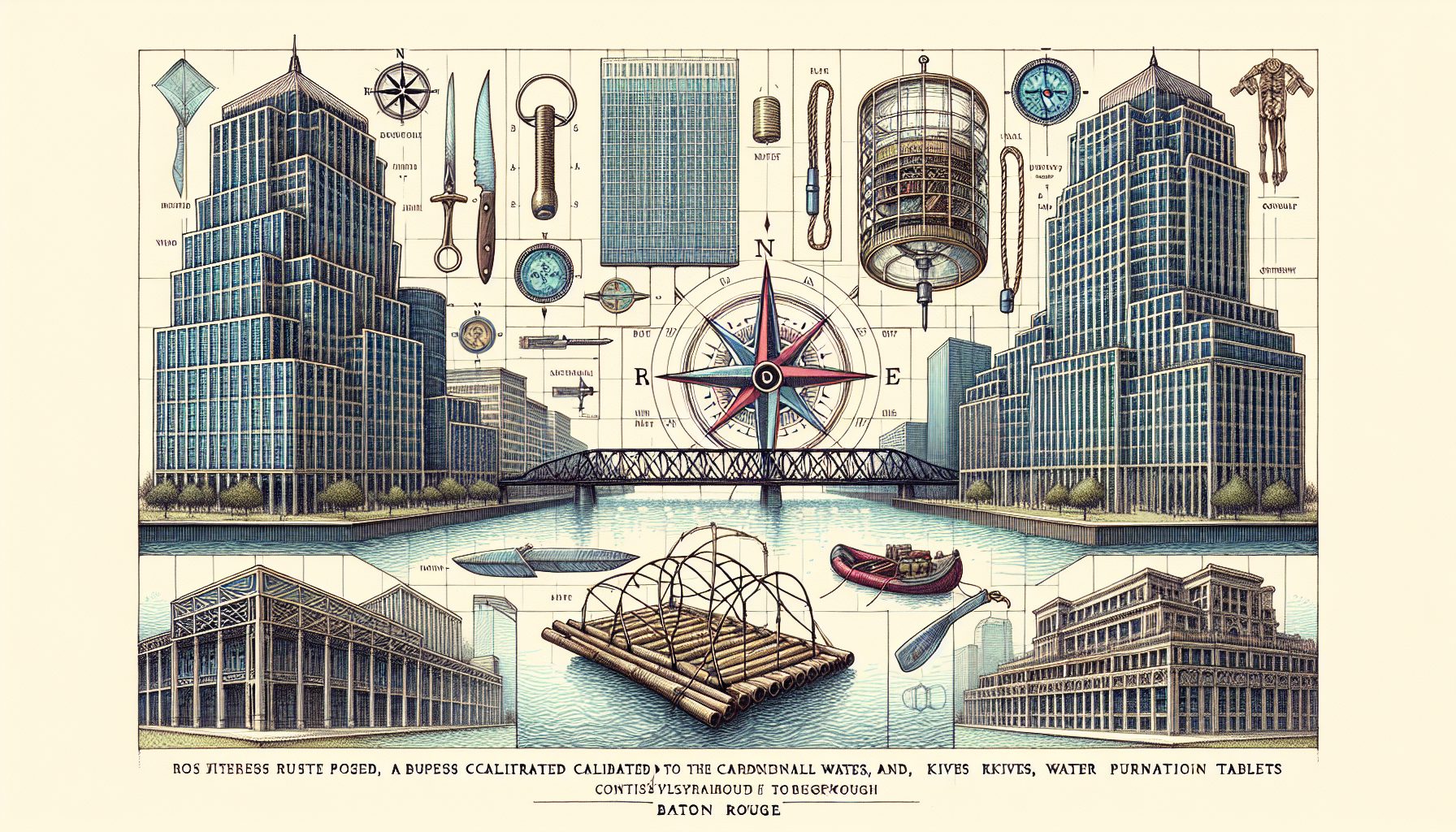

The Southfield, Mich.-based company’s business, at its core, is data aggregation. Polk compiles vehicle registration and sales data from 260 sources. These include motor vehicle departments in the U.S. and Canada, insurance companies, automakers and lending institutions. The company then repackages that data and sells it to dealers, manufacturers and marketing firms—anyone who wants detailed information about car-buying trends, such as the top-selling SUV for a particular ZIP code.

For years, Polk’s process of consolidating data ran on IBM mainframes. By the time Vasconi joined the company in 2003, portions of the software were 20 years old. “Some of the people working here are younger than the code,” he says.

The mainframe system wasn’t broken, per se. But the entire process was engineered around the batch-processing operations of a mainframe, in which multiple computing tasks are queued before they’re processed in order to maximize mainframe resources. Vasconi believed newer technologies could speed up delivery of data to customers—by processing data as soon as Polk received it, instead of in daily or weekly batches—and lower the company’s costs by automating tasks that were handled manually.
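The difference between the two approaches can be sketched in a few lines of Python. This is an illustration only, not Polk's software: the record fields, the `process` step and the batch size are all hypothetical.

```python
from collections import Counter


def process(record, totals):
    """Hypothetical processing step: count one registration per (ZIP, model)."""
    totals[(record["zip"], record["model"])] += 1


def run_batch(records, batch_size):
    """Batch style: queue records and process them only once a batch is full."""
    totals, queue, visible = Counter(), [], []
    for record in records:
        queue.append(record)
        if len(queue) == batch_size:
            for queued in queue:
                process(queued, totals)
            queue.clear()
            # Results become visible only after each batch completes.
            visible.append(sum(totals.values()))
    if queue:
        # Flush the final partial batch at the end of the run.
        for queued in queue:
            process(queued, totals)
        visible.append(sum(totals.values()))
    return totals, visible


def run_incremental(records):
    """Incremental style: process each record as soon as it arrives."""
    totals, visible = Counter(), []
    for record in records:
        process(record, totals)
        # Results are up to date after every single record.
        visible.append(sum(totals.values()))
    return totals, visible


# Made-up sample data, for illustration only.
records = [
    {"zip": "48034", "model": "SUV-A"},
    {"zip": "48034", "model": "SUV-A"},
    {"zip": "48034", "model": "SUV-B"},
    {"zip": "07030", "model": "SUV-B"},
    {"zip": "07030", "model": "SUV-A"},
]

batch_totals, batch_visible = run_batch(records, batch_size=2)
incremental_totals, incremental_visible = run_incremental(records)
```

Both functions arrive at the same totals. What changes is when those totals can be read: the batch version refreshes them only as each batch finishes, while the incremental version refreshes them with every record.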

Vasconi also worried that the old system couldn’t keep pace with the proliferation of data. Polk’s entire database already comprises more than 1.5 petabytes (1.5 quadrillion bytes), and historical trends indicate it will continue to grow even faster. “We knew we had a capacity issue, and that getting the value out of the data would be a challenge for the company because of the sheer volume,” he says.

Customers, meanwhile, have been champing at the bit to get sales data more quickly. Paul C. Taylor, chief economist for the National Automobile Dealers Association, which represents 19,700 car and truck dealers, says Polk’s vehicle registration data by state is typically available 30 days after carmakers release their national sales data. That prevents dealers in, say, New Jersey from immediately comparing trends in their area with those nationwide and adjusting inventories accordingly.

“In a perfect world, you’d have the state breakdown when you have the national sales figures,” he says. “But if [Polk] could take even a week off the cycle, that would be a vast improvement.”

Actually, Polk had tried twice before to move off the mainframe, but those projects ended up being scaled back. “It’s the mother of all databases for automotive intelligence,” says Joe Walker, president of Polk Global Automotive, the division of the company that sells data to businesses. “It seemed too daunting a task to try to move it.”

Company executives took a different tack with a project code-named ReFuel. In late 2004, Polk created a new company, called RLPTechnologies, to build the next data aggregation system. The subsidiary is housed in a building in neighboring Farmington, Mich., 7 miles from Polk’s campus. It has a full-time staff of 30, and at the peak of development last year it employed 130 contractors, including consultants from Capgemini.

“We wanted to free up the people who were going to build the next generation of what is, quite honestly, our cash cow,” says Vasconi, who is also president of RLPTechnologies.

Walker acknowledges that the expected cost of the project, which ended up exceeding $20 million, caused some trepidation. It was a huge undertaking for the private company, whose annual revenue is estimated to be around $275 million. “Right from the beginning, we were concerned with whether we’d see the ROI [return on investment] on this,” he says.

Polk expected the ReFuel project to save money. But only later did Walker and Vasconi confirm that it helped chop Polk’s costs for data operations management nearly in half.