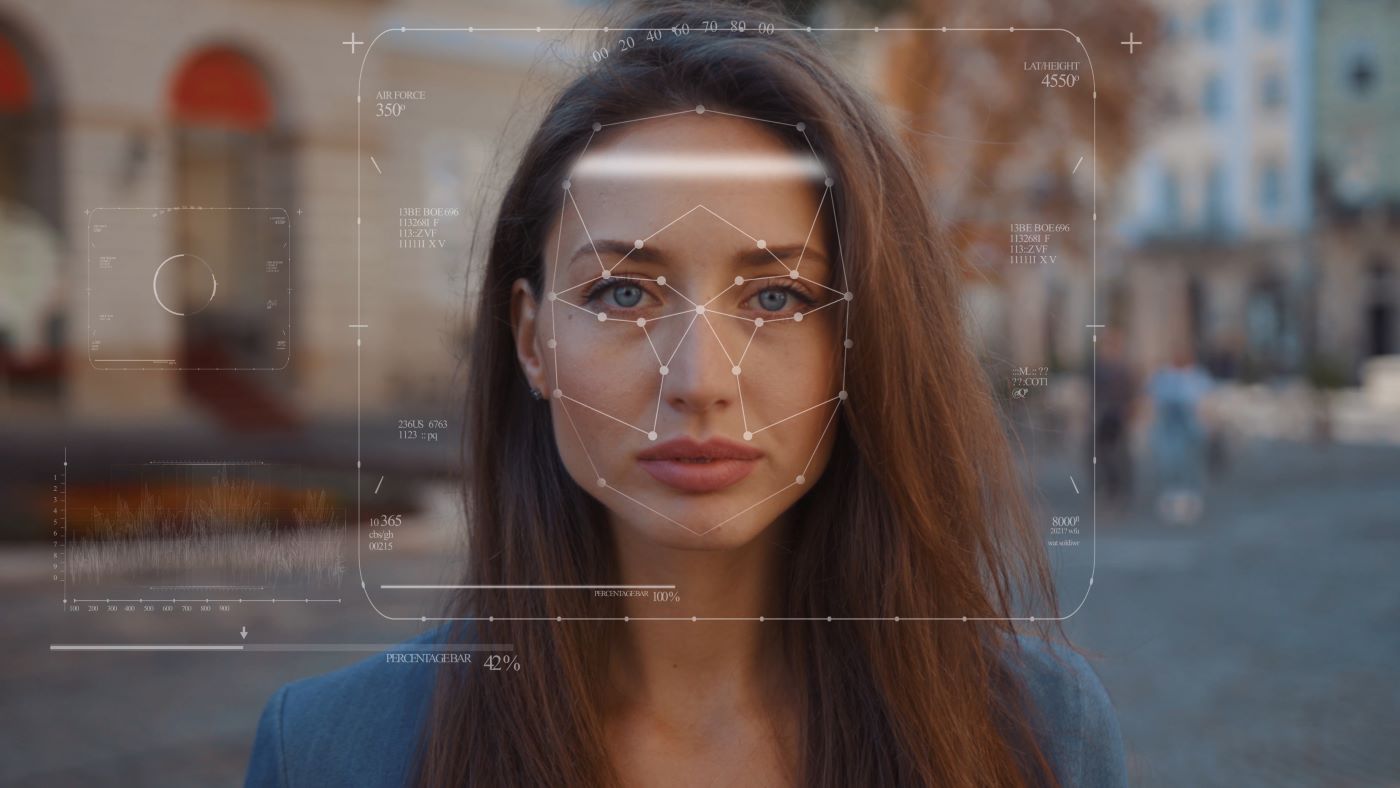

If you’ve watched any of the long-running crime dramas like CSI or NCIS, you’ve likely seen facial recognition software lauded as a huge breakthrough in law enforcement technology. However, this facial recognition software is now widely available to the public, and it may not be the blessing that some think it is. While facial recognition AI can do some good, it can also do a lot of harm if it’s not handled in the right way. Let’s take a look at the good, the bad, and the ugly uses of facial recognition technology.

Problems with facial recognition tools

While facial recognition technology could one day be great, it currently has several major issues that developers need to work out.

Accuracy

Unfortunately, facial recognition software just isn’t equally accurate across race and gender lines. While the maximum error rate when identifying light-skinned men is only 0.8 percent, the error rate for identifying dark-skinned women can be as high as 34.7 percent. At that rate, more than one out of every three dark-skinned women is misidentified by facial recognition tools. And when organizations are using facial recognition technology to make major decisions, like staffing, lending, or sentencing, this level of inaccuracy is unacceptable.
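To make the gap concrete, here is a minimal Python sketch of how an evaluator might compare error rates between demographic groups. The group labels, identities, and the `error_rates_by_group` helper are hypothetical and only for illustration; the two percentages at the end are the figures quoted above.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """Return {group: share of records where the prediction was wrong}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation results: (group, true identity, predicted identity)
sample = [
    ("group_a", "id1", "id1"),
    ("group_a", "id2", "id2"),
    ("group_a", "id3", "id3"),
    ("group_a", "id4", "id4"),
    ("group_b", "id5", "id5"),
    ("group_b", "id6", "id9"),  # wrong match
    ("group_b", "id7", "id7"),
    ("group_b", "id8", "id2"),  # wrong match
]
rates = error_rates_by_group(sample)

# The error rates quoted in the article, as fractions
light_skinned_men = 0.008
dark_skinned_women = 0.347

# 34.7 percent is above one in three (about 33.3 percent)
exceeds_one_in_three = dark_skinned_women > 1 / 3

# How many times higher the worst-case error rate is
disparity = dark_skinned_women / light_skinned_men

print(rates)
print(exceeds_one_in_three, round(disparity, 1))
```

Reporting a single overall accuracy number would hide this kind of gap, which is why a per-group breakdown is the more useful thing to look at.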

Bias

It’s probably not a coincidence that Black women get misidentified so often by facial recognition software. Racial and gender bias are huge problems within our society, and the training that AI models receive tends to exacerbate them. Developers might, usually unintentionally, train AI facial recognition software with more photos of light-skinned people than dark-skinned people, automatically making the platform better at identifying light-skinned people. Alternatively, engineers might train the system on historical data, which, for hiring decisions, might lead it to favor men over women.

Privacy

Understandably, many people have concerns about what facial recognition technology would mean for their privacy. China, for example, has already shown its willingness to track and monitor its citizens with a combination of facial recognition and security cameras, even for non-criminal acts.

And while these practices come with their own issues, a bigger concern is what happens when that data leaks. The SolarWinds hack of 2020 (and several of the major ransomware attacks since then) showed that government entities aren’t as secure as people would like to think, and all of that biometric data in the wrong hands could lead to major identity problems across the globe.

Good uses for facial recognition technology

Facial recognition isn’t all bad, however. When used correctly, it can have some positive outcomes for society as a whole.

Providing leads for law enforcement

Criminal investigations don’t always produce high-quality leads, especially in the beginning. Facial recognition technology can give law enforcement officers a starting point if there is footage of the crime scene or the surrounding areas. This may provide them with a list of potential witnesses.

However, if law enforcement agencies are going to use facial recognition in this way, they need to be mindful of the problems with accuracy. They should only use this kind of technology to help them find leads, not to convict suspects.

Identity and privacy control

Apple introduced its Face ID feature back in 2017, allowing users to unlock their phones with facial recognition. The company made this a secure option by converting the biometric data into a number, which is the only thing that’s stored on the user’s device. This way, if a hacker were able to get into the phone’s data, they wouldn’t have access to the underlying facial data. Overall, most users feel that the system is accurate and fast, although it can sometimes be fooled, and a user can be forced to unlock a device.
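As a rough illustration of the general idea of storing numbers instead of a photo, the Python sketch below compares a stored numeric template against a fresh scan. This is not Apple’s implementation: the four-value templates, the cosine-similarity comparison, and the 0.95 threshold are all assumptions chosen to keep the example small.

```python
import math

def cosine_similarity(a, b):
    """Similarity between two equal-length numeric templates."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def matches(stored, candidate, threshold=0.95):
    """Accept the candidate only if it is close enough to the stored template.

    The threshold is a hypothetical value, not one taken from any real product.
    """
    return cosine_similarity(stored, candidate) >= threshold

# Hypothetical templates: only these numbers are kept, never the photo itself
enrolled = [0.12, 0.80, 0.35, 0.44]
same_person = [0.10, 0.82, 0.33, 0.45]   # slightly different scan of the same face
other_person = [0.90, 0.05, 0.40, 0.10]  # a different face

unlock_for_owner = matches(enrolled, same_person)
unlock_for_stranger = matches(enrolled, other_person)
print(unlock_for_owner, unlock_for_stranger)
```

In a scheme like this, where the threshold sits is a trade-off: set it too loose and the system becomes easier to fool, set it too strict and it starts rejecting its legitimate user.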

Bad uses for facial recognition software

Despite good intentions, facial recognition software has some ill-conceived uses, mostly due to the current limits of the technology.

Background checks

Many workplaces require background checks before they’ll hire an applicant, and some are starting to use facial recognition as a part of that. Evident ID is a company that’s making this possible by comparing applicant-provided images with government documents and online databases. But facial recognition isn’t always accurate, and people change their appearances all the time for non-nefarious purposes. People cut and dye their hair, skip makeup, or wear it differently than they did in their ID photos. All of this could lead to inaccurate results.

Connecting unknown faces and names

With facial recognition widely available, people could take a photo of someone they don’t know to find their name and other information. Normally this would fall into the ugly category, but there are potential situations where the intentions behind this could be good. For example, let’s say someone notices a child in public who closely resembles the child on a missing-person poster. They could snap a quick photo, upload it to the service to check, and then notify the authorities if necessary.

In all likelihood, however, facial recognition software won’t have had much training with children’s photos, so it may not provide accurate results. Additionally, people who use facial recognition software in this way are more likely to do so for unsavory purposes, like robbing or stalking.

Ugly uses for facial recognition technology

The ugly truth about facial recognition technology, and any technology that has privacy concerns, is that there are people out there who will use it for problematic purposes.

Stalking

There are several nonprofit organizations, like Connections for Abused Women and their Children (CAWC), dedicated to helping victims of abuse escape their abusers. However, making facial recognition technology widely available could undo all of their work by allowing abusers to upload photos of their victims and find current information about them. It can also further enable stranger stalking, since a stalker could upload a photo of a target to find that person’s online profiles and learn more about them.

Basing court cases on it

While facial recognition can provide leads for law enforcement officers, some prosecutors are using the technology in court to convict suspects. The problem is, facial recognition software isn’t completely accurate, especially when attempting to identify people of color. If facial recognition is the sole evidence for convicting someone of a crime, it’s very possible the wrong person could be put in prison.

Protecting against bad and ugly uses of AI facial recognition

Facial recognition has good applications, but there’s a long way to go before it’s ready for public consumption. It needs more training with photos of both women and people of color, and there have to be more secure ways to store the data. Additionally, there need to be opt-out clauses for publicly available tools to allow abuse and stalking victims to protect themselves. It will likely be several more years until this technology is ready for wider use.